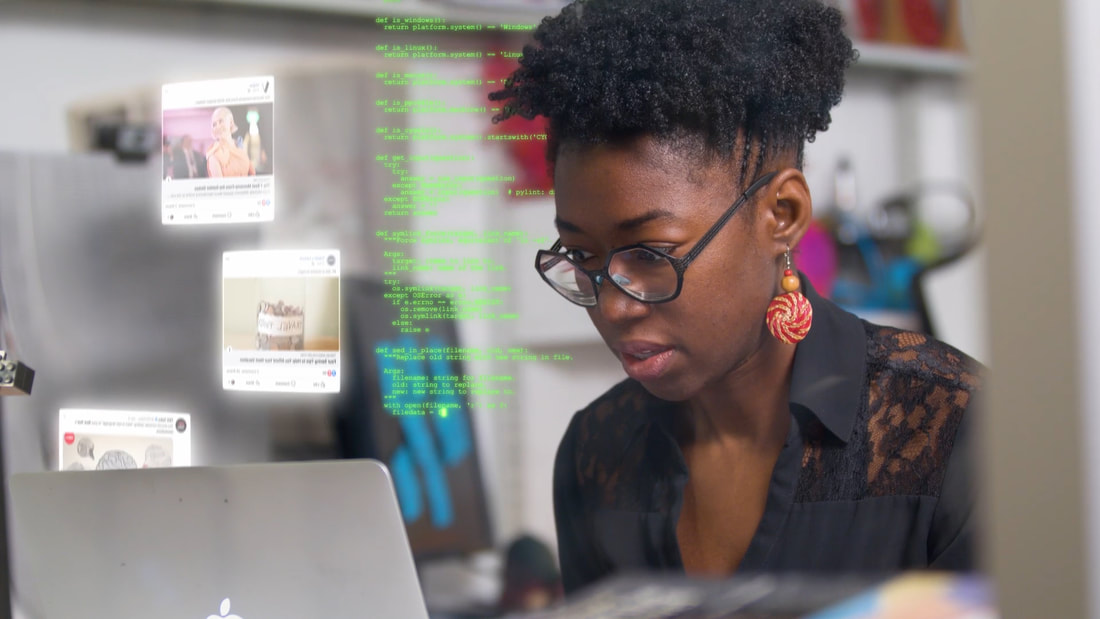

The film opens with Joy Buolamwini, a black woman who is a computer scientist at MIT, explaining how she tried to make a fun interactive mirror, but the camera couldn’t recognize her face. When she put on a white mask, the algorithm in the camera instantly recognized her. The software couldn’t see her real face because it had been taught what white men’s faces looked like. This leads her on a journey into the algorithms that control so many aspects of our lives but are built on inequalities and biases.

The film escalates from there, layering new scenarios on each concept until viewers feel genuine terror and despair at our lives being in the hands of these algorithms. Told through a series of talking-head interviews, illustrations, and documentary footage, the film is educational yet passionate, and well explained. A key aim is to make sure people understand the potential future implications of allowing companies to develop biased programs. This isn’t just fear-mongering. It’s a clear explanation of why we need to regulate algorithms that have the power to affect our lives, to ensure that they are truly fair.

Particularly important to the director Shalini Kantayya (2015’s “Catching the Sun”) is the representation of women and people of color. Many of the film’s examples are about black women in particular, as the group most likely to be negatively affected by such software. Algorithms decide things like whether people are accepted for loans, college placement, and access to health care or housing. These systems are assumed to be fair because they’re just computers. But if programmers feed them data that reflects existing biases, algorithms only learn to replicate or increase those biases. Amazon.com, for instance, discovered that the company’s recruitment tool excluded all female applicants because the vast majority of people hired for a particular position had been men.

A few real-life scenarios are used as object lessons to bring these concepts to life. A facial recognition camera mistakenly identifies a 14-year-old black boy, who feels shaken after police search and question him. These cameras are not accurate enough to rely on, especially for people of color, who are more likely to be racially profiled by police. Even at the more benign end of the scale, algorithms tailor advertisements online based on people’s perceived interests. The more affluent may see ads for items to buy. On the other side of the coin are predatory ads targeting the poor. People with gambling problems are pushed toward betting websites, and people with money problems see ads for extortionate payday loans. These real-life examples make for a hard-hitting documentary that demonstrates how biased algorithms affect audiences personally.

One risk with educational, issue-led documentaries is that they leave viewers feeling lectured to, helpless, or frustrated. “Coded Bias” ends on a positive note, but it also definitely touches a nerve. It links inequalities of gender, race, and class with the people who benefit from building algorithms for profit without any regulation. It would have been interesting to hear the perspective of developers who are trying to do good. Although the film mentions changes and improvements that tech companies are making, we don’t hear from any of them directly. Are these changes substantive, or are the companies merely reacting to public pressure?

Overall, “Coded Bias” makes viewers question things they previously assumed to be safe. Where AI starts to make decisions about our lives without any human intervention, we should challenge it. Where unreliable facial recognition software sends police to our neighborhoods, we should resist. Although this isn’t always possible, perhaps we can take solace in the fact that there are passionate and intelligent experts fighting for regulation and fairness of AI at the highest levels.


Author: Hi, I'm Caz. I live in Edinburgh and I watch a lot of films. My reviews focus mainly on women in film - female directors or how women are represented on screen.
